Asking students to not use artificial intelligence is like “putting chocolate cake all around the room and saying, ‘don’t eat the chocolate cake,’” says Mr. Jake Moffat, English Co-Department Head and Creative Inquiry teacher. A tool that can craft essays and conduct deep research in seconds, AI has made outsourcing education a greater temptation than ever before. And not just for students; according to Moffat, “teachers do it too.”

Students have to tell their teachers that they “don’t ever use it,” which teachers know is “not true,” Moffat says. Likewise, teachers aren’t always honest about how they use AI in their lesson planning. Consequently, AI provokes dishonesty on both sides, which Moffat says “erodes the relationship between teachers and students.”

To bridge this divide, Moffat and Diana Neebe, Assistant Principal of Instruction and Faculty Development, created Exploring AI at the Prep: a communal space for students and faculty to examine the role of AI in education and develop an acceptable usage policy for SHP together.

This is a unique opportunity for Sacred Heart community members to speak openly about their own AI use without consequence and to collaborate on major educational questions at the Prep. Some students, including Oskar Herlitz ’26, were initially skeptical of the faculty-organized initiative on AI but were compelled to participate once they discovered that “genuine policy changes could result from [their] discussions.”

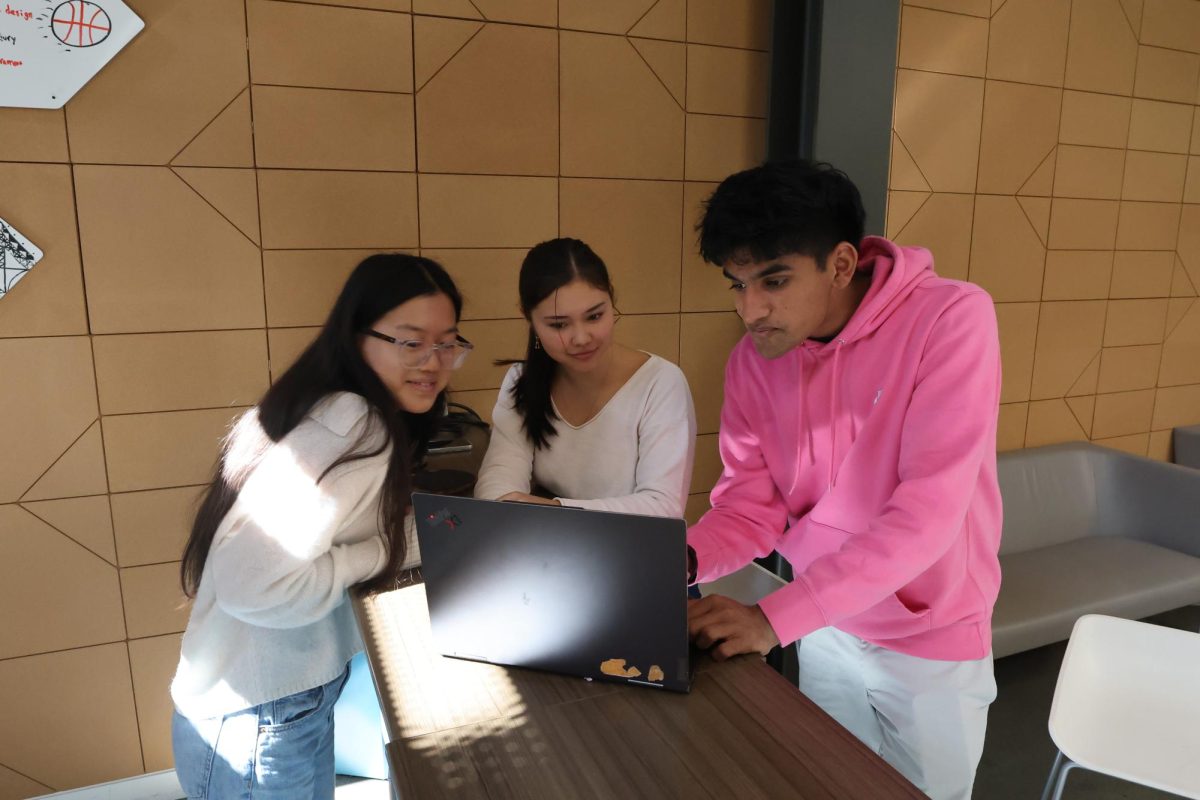

In their first meeting, they held an open forum for students and faculty to share concerns and curiosities around AI. Based on this input, Moffat and Neebe assembled a curriculum with lessons taught collaboratively by groups of students and teachers.

In the beginning, the initiative explored interactions between AI and humans more broadly. The first lesson, taught by Mr. Kevin Morris, a computer science teacher at SHP, and Herlitz, aimed to “give some sense of how AI works to a bunch of people in the room who are not coders,” Morris says. Breaking down the probabilistic algorithm behind AI’s decision-making, Herlitz made the case that AI is nothing more than a mathematical tool.

The following week, Mr. Douglas Hosking, an English teacher, alongside Lois Pribble ’27, Scarlet O’Brien ’27, and Kendall Chad ’27, presented on how AI affects human relationships. They demonstrated how AI’s capacity to simulate social interaction could provide significant benefits. For example, Hosking says that, “if created appropriately and properly,” AI counseling tools could solve the widespread problem of limited access to mental health practitioners. However, they also warned that artificial socializing carries major risks because AI can “make you feel real feelings,” yet it is “distinctly not a person.”

Put together, these first two lessons emphasize the inherent conflict between AI and human beings. From there, the initiative refocused on AI in education. In lesson three, they examined how AI could supercharge learning, and the next week, they analyzed how AI could supercharge teaching.

After laying a foundation of trust and understanding between students and faculty, the initiative began a three-week process called “Stop, Start, Continue.” Teachers from every department worked with small groups of students to compare their curricular intentions with students’ classroom experiences, openly sharing which assignments felt meaningful and which felt unnecessary. This, Moffat says, is where “trust and vulnerability get huge.”

These groups aim to flesh out which exercises are “so essential to who we are as children of the Sacred Heart, that we insist on doing [them] even though we don’t have to,” Neebe says. These conversations are pivotal to developing a shared set of guidelines around acceptable AI use.

After thorough discussion of AI usage in education, the initiative will conclude with a meeting on the ethics of AI more broadly. While AI can be very useful or entertaining, Neebe asks, “is it worth all the natural resources we just squandered?” Because AI consumes major amounts of natural resources, this question is a crucial point of discussion, but it was reserved until the end of the series because labeling AI as unethical from the start would block productive conversation.

Based on the insights gathered from these sessions, students and faculty will work together to set boundaries around AI, diving into specifics like AI’s role in the cognitive and social components of a Sacred Heart education.

This initiative does not have the authority to set school policy, so the guidelines it develops won’t automatically become official. They will, however, likely be presented to faculty and staff at the Teacher Symposium in the spring semester. Moffat hopes that “if you get this many committed teachers and committed students, who are putting honest, thoughtful work in, saying this is where we should go, that’s where we’ll go.”